Technical decisions

eRecruitment authenticates against an ODS which contains the status and role of the users; these details are then loaded into the authentication principal and used for authorization via the SecurityContextHolder.

The following requirements were must-haves:

1. A guaranteed timeout for inactive logins (session-based or otherwise).

2. Users can store temporary data associated with their login session.

3. Users with certain roles would log in via the mobile app and others via an Angular SPA, hence token-based authentication was required.

The first requirement could be supported in a stateless manner with the exp claim in JWTs. However, users commonly expect their login session to be extended transparently while they interact with the app. Supporting that would have required managing the token rotation lifecycle with refresh tokens, as well as storing and evicting invalidated tokens when a user logs out.

The second requirement would have meant reinventing the concept of a session store anyway, e.g. serializing and deserializing the user's session data to a key-value store like Redis, keyed by their user ID.

spring-session supports these two use cases out of the box. The remaining challenge was to figure out if there were other means of passing the session ID besides the default JSESSIONID cookie.

Conveniently, the HttpSessionIdResolver interface allows the way the session ID is resolved from an incoming request to be customized. In eRecruitment's case, it was as simple as:

```kotlin
// javax.servlet.* assumes Spring Boot 2.x; use jakarta.servlet.* on Spring Boot 3+.
import javax.servlet.http.HttpServletRequest
import javax.servlet.http.HttpServletResponse
import org.springframework.session.web.http.HttpSessionIdResolver

class BearerSessionIdResolver : HttpSessionIdResolver {

    private val sessionIdHeader = "X-Session-ID"

    override fun setSessionId(request: HttpServletRequest?, response: HttpServletResponse?, sessionId: String?) {
        response?.setHeader(sessionIdHeader, sessionId)
    }

    override fun expireSession(request: HttpServletRequest?, response: HttpServletResponse?) {
        response?.setHeader(sessionIdHeader, "")
    }

    override fun resolveSessionIds(request: HttpServletRequest?): List<String> {
        // Try loading from the Bearer authorization header first, then from X-Session-ID
        val headerValue = request?.getHeader("Authorization")
            ?.takeIf { it.startsWith("Bearer ") }
            ?.substring(7)
            ?: request?.getHeader(sessionIdHeader)
        return headerValue?.let { listOf(it) } ?: emptyList()
    }
}
```

A login endpoint in a @RestController that returned the session ID in a DTO was then written.
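On the client side, the session ID returned by the login endpoint can then be sent back with every request. The following is a minimal sketch, assuming a hypothetical withSessionId helper (the name and shape are not from the original codebase) and relying only on the fact that the resolver above checks the Bearer authorization header first:

```typescript
// Hypothetical client-side helper; names and types are assumptions.
type HeaderMap = Record<string, string>;

// Returns a copy of `headers` that carries the session ID as a Bearer token,
// which is the first place the server-side resolver looks.
function withSessionId(headers: HeaderMap, sessionId: string | null): HeaderMap {
  if (!sessionId) {
    // Not logged in: leave the headers untouched.
    return { ...headers };
  }
  return { ...headers, Authorization: `Bearer ${sessionId}` };
}
```

Both the Angular SPA and the mobile app could wrap their HTTP client with a helper of this kind, so that individual calls never deal with the session ID directly.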

After migrating certain instances to Keycloak, the aforementioned roles were imported as Realm Roles. eRecruitment then extracts the roles and scopes from the Keycloak-issued JWT as follows:

```kotlin
http
    ...
    .oauth2ResourceServer { oauth2 ->
        oauth2.jwt().jwtAuthenticationConverter(JwtAuthenticationConverter().apply {
            setJwtGrantedAuthoritiesConverter { jwt ->
                // "scope" is a space-delimited string, mapped to SCOPE_* authorities
                val scopes = (jwt.claims["scope"] as? String)?.split(" ")
                    ?.map { SimpleGrantedAuthority("SCOPE_$it") }
                    ?.toSet() ?: emptySet()
                // realm roles live under realm_access.roles, mapped to ROLE_* authorities
                val roles = (jwt.getClaimAsMap("realm_access")?.get("roles") as? List<*>)
                    ?.filterIsInstance<String>()
                    ?.map { SimpleGrantedAuthority("ROLE_$it") }
                    ?.toSet() ?: emptySet()
                scopes + roles
            }
        })
    }
```

This allowed SpEL expressions like @PreAuthorize("hasAnyRole('ADMIN', 'SUPERADMIN')") to work seamlessly.

Certain attributes (e.g. phone number and other identification details) that would otherwise belong in a Profile entity were migrated to Keycloak User Attributes so that other related applications could consume them, once those applications had been granted access to Keycloak's REST API via service accounts. Having Keycloak expose new endpoints to filter or query those attributes was as simple as implementing a new Service Provider Interface, in particular RealmProvider for realm-wide attributes.

Many of these attributes, such as "Introducer of the agent" or "Agent code", were read often but written very rarely. To avoid a round trip to Keycloak every time a user accessed their profile details, they were also cached with an appropriate TTL using Spring's RedisCacheManager.
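The read-through-with-TTL idea can be illustrated independently of Spring. The sketch below is a small in-memory stand-in, not the RedisCacheManager configuration that was used; the TtlCache class, its method names and the injectable clock are all assumptions made for illustration:

```typescript
// Hypothetical in-memory read-through cache; illustrative only.
class TtlCache<V> {
  private readonly entries = new Map<string, { value: V; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Returns the cached value while it is fresh; otherwise calls `load`
  // (standing in for the round trip to the identity provider) and caches it.
  getOrLoad(key: string, load: () => V): V {
    const hit = this.entries.get(key);
    if (hit !== undefined && hit.expiresAt > this.now()) {
      return hit.value;
    }
    const value = load();
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }

  // Drops an entry, e.g. after one of the rare writes, so readers do not see stale data.
  evict(key: string): void {
    this.entries.delete(key);
  }
}
```

Because the attributes are rarely written, a fairly long TTL combined with eviction on write keeps staleness low while removing most round trips.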

After containerizing eRecruitment, Traefik with the Docker provider was used as a self-contained ingress solution.

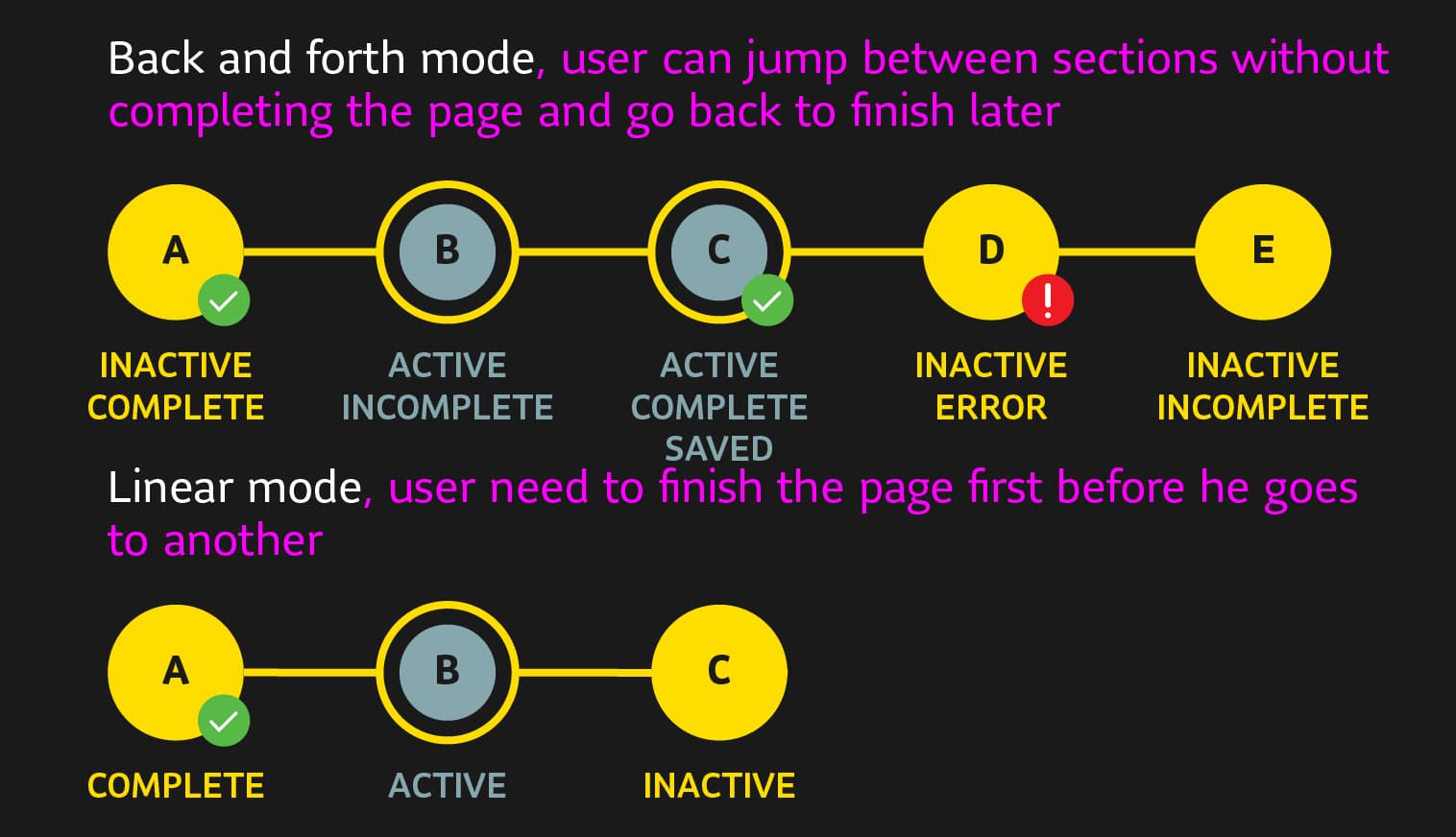

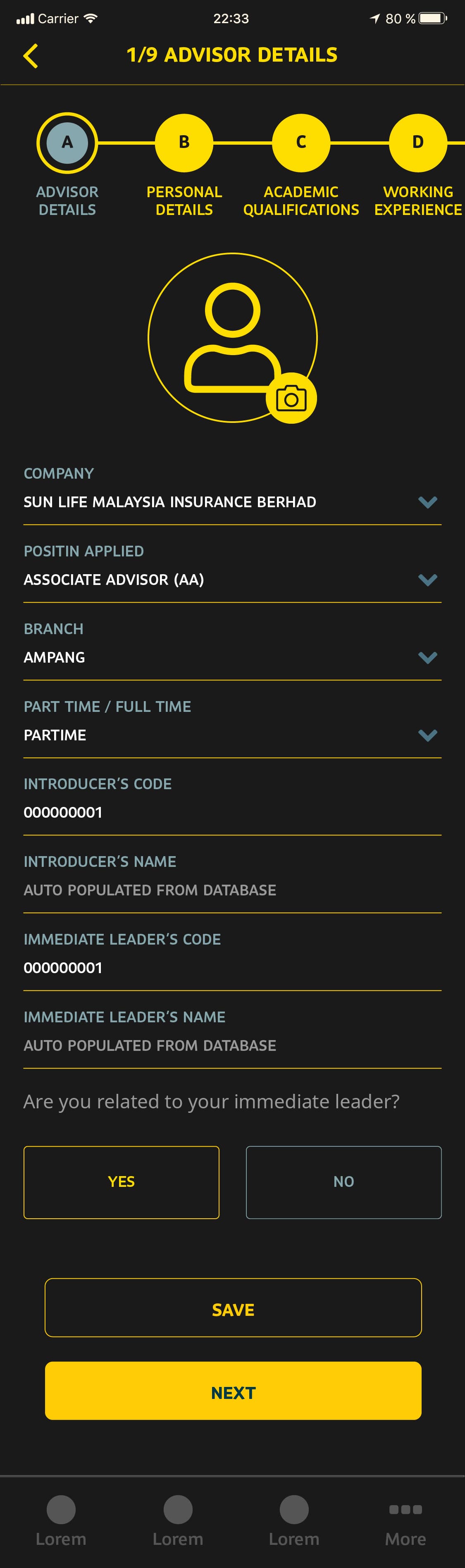

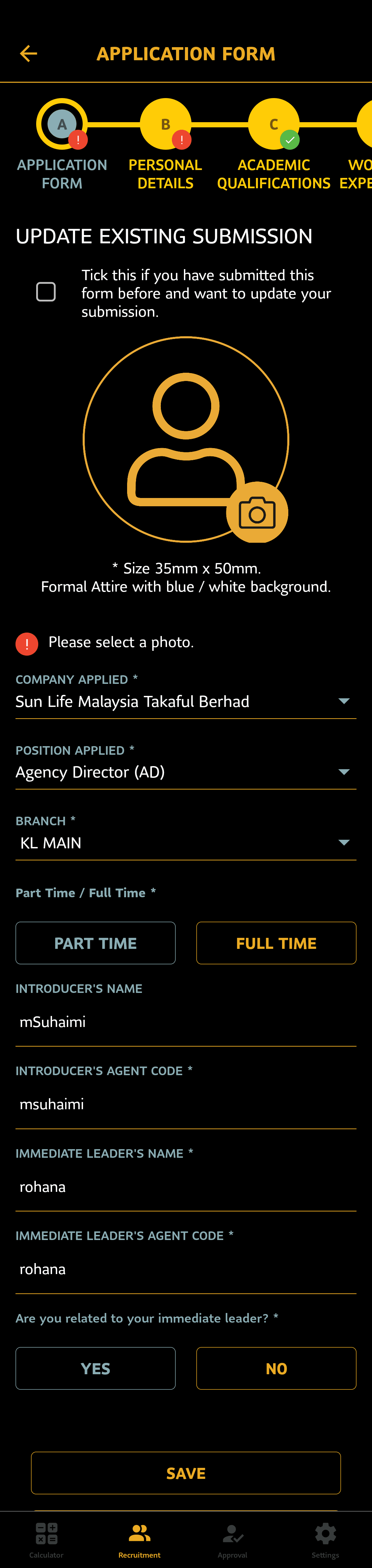

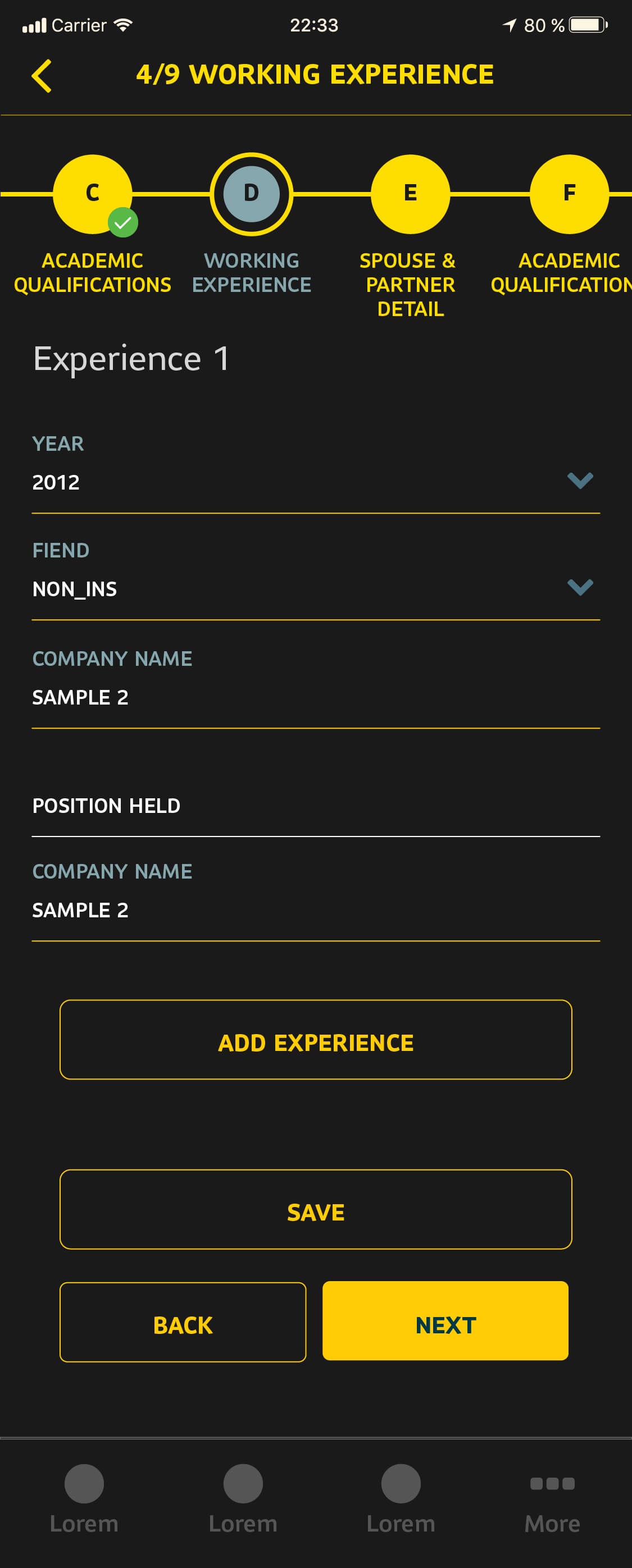

UI implementation notes

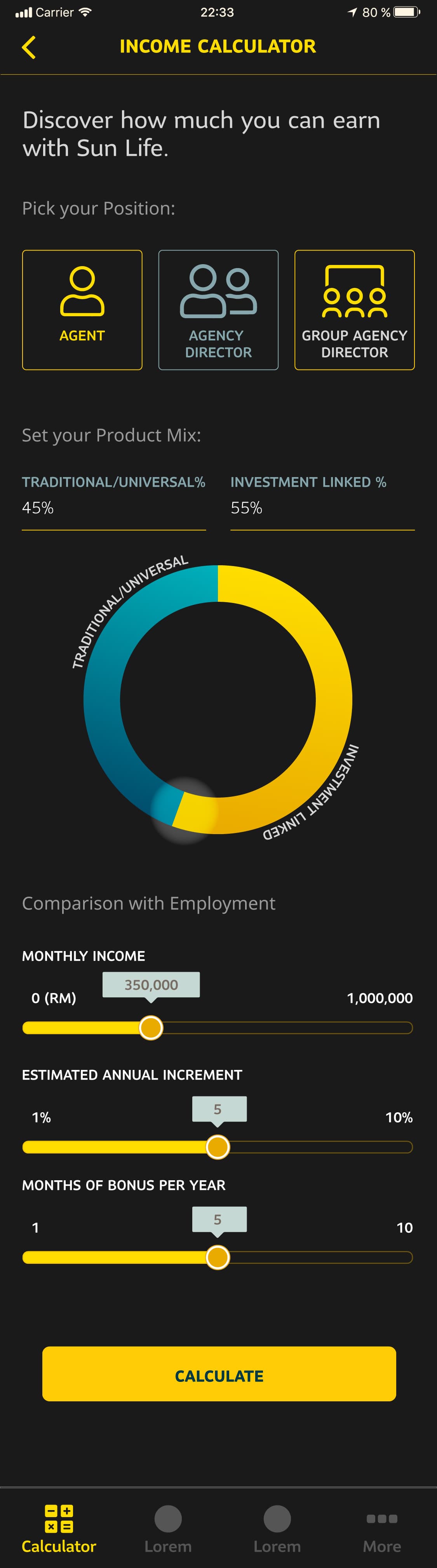

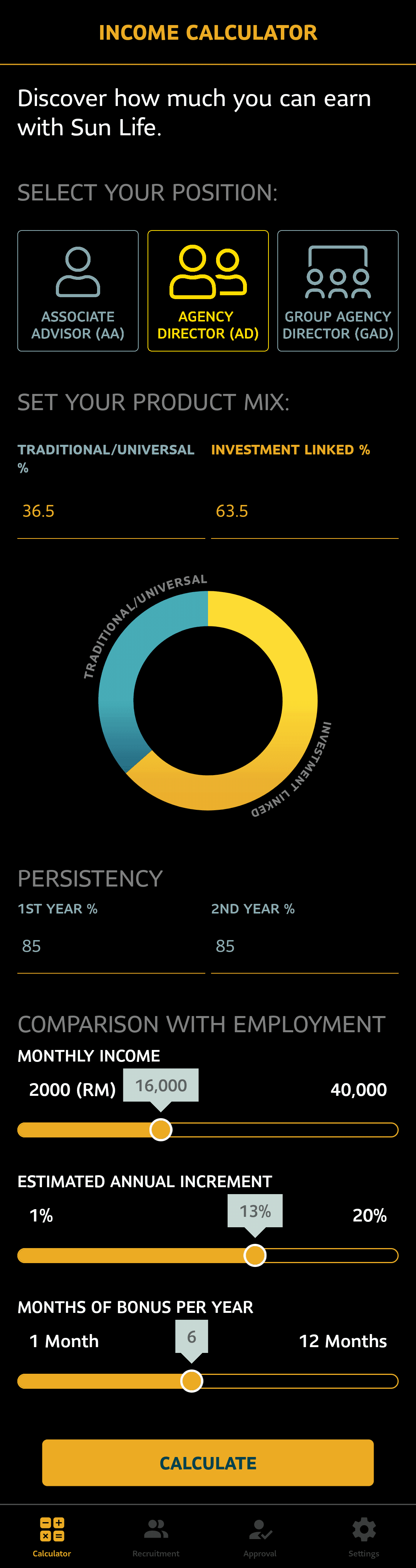

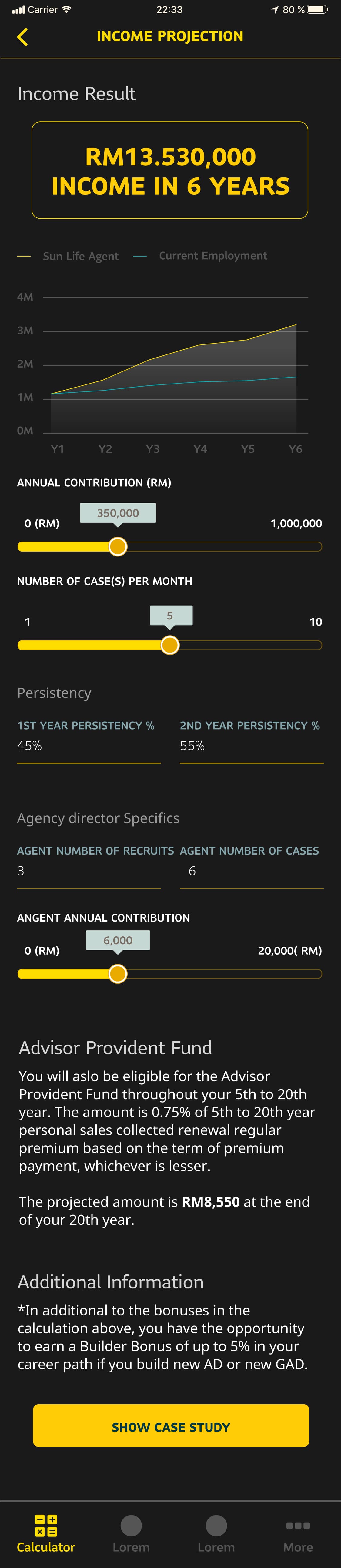

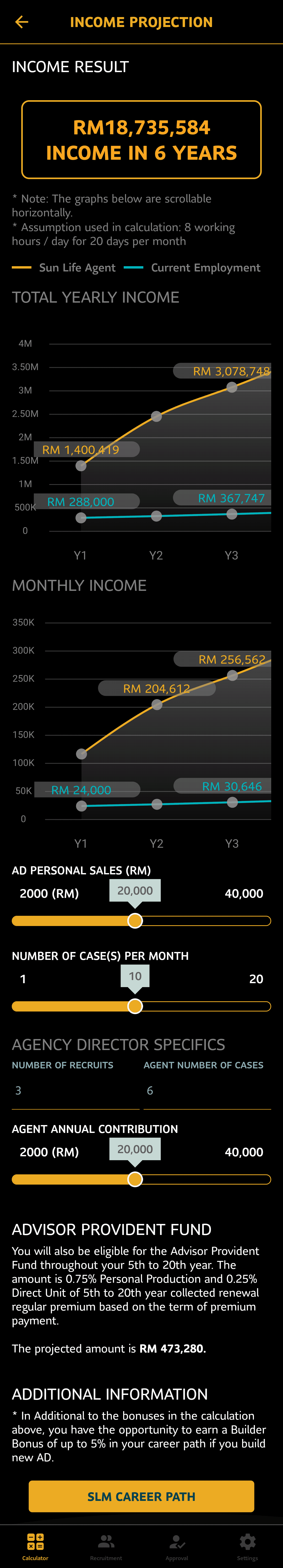

After the mobile prototype was accepted, the UI was iterated upon with the regional design team to strike a balance between usability, feasibility of implementation, and fidelity to their design philosophy.

This screen, for instance, relied mostly on React Native's Animated and PanResponder APIs for performance. In particular, Animated.Value.interpolate was used to derive the positions of UI elements such as the slider tooltip and handles and the graph (which changes in real time as users interact with the sliders), while the angle relative to 0° and the center of the interactive donut was calculated in the onPanResponderMove callback.
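The two calculations can be sketched as plain functions. This is an illustration under stated assumptions, not the original code: 0° is assumed to sit at 12 o'clock and increase clockwise, the function names are hypothetical, and interpolateClamped only mirrors what a two-point interpolate({inputRange, outputRange, extrapolate: 'clamp'}) computes rather than calling the Animated API.

```typescript
// Angle in degrees, in [0, 360), of a touch point around the donut's center.
// Assumes 0° at 12 o'clock, increasing clockwise; screen y grows downwards.
function touchAngleDegrees(centerX: number, centerY: number, touchX: number, touchY: number): number {
  const dx = touchX - centerX;
  const dy = touchY - centerY;
  // Arguments are swapped to measure from the vertical axis,
  // and dy is negated because screen coordinates point down.
  const degrees = (Math.atan2(dx, -dy) * 180) / Math.PI;
  return (degrees + 360) % 360;
}

// Linear mapping from an input range to an output range, clamped at both ends.
// Assumes inputRange[0] < inputRange[1].
function interpolateClamped(
  value: number,
  inputRange: [number, number],
  outputRange: [number, number],
): number {
  const [inMin, inMax] = inputRange;
  const [outMin, outMax] = outputRange;
  const clamped = Math.min(Math.max(value, inMin), inMax);
  const t = (clamped - inMin) / (inMax - inMin);
  return outMin + t * (outMax - outMin);
}
```

In an onPanResponderMove handler, the touch coordinates from the gesture would feed touchAngleDegrees, and the resulting angle could in turn drive the slider value.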

Several libraries like react-hook-form and Formik that could do validation and form state management were considered in light of these requirements. However, these factors had to be kept in mind:

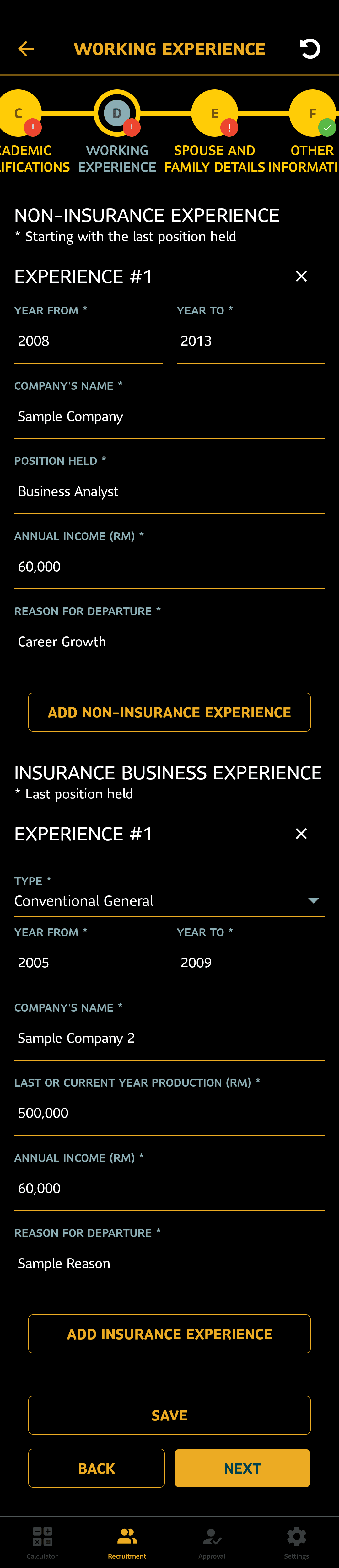

1. The form was composed of 9 sections (A to I), with 60+ input fields. The validity of section I conditionally depended on the state of fields in A and B. The number of subsections in sections C and D depended on the state of fields in A.

2. The user would be logged out client-side too after an inactivity period (e.g. if they were in the middle of a discussion with the candidate). However, immediately wiping their data would be bad UX; they should be able to continue where they left off if they logged in again with the same account.

3. Because of this, images and files had to be stored temporarily in app-managed storage on disk until the final submission.

4. Data compliance policies required fine control over the management and purging of data at rest (e.g. if the device was lost).

5. The user should be able to revert to the last-saved version of the form in case of accidental changes.

6. Each section of the form was a different route in a TabNavigator, i.e. the sections were independent in terms of the component hierarchy, and not all of them might even be mounted at any given moment.

Due to 1, 3 and 4, the form had to be manipulated in memory and only flushed to disk when the user tapped "Save" or the session went idle and the in-memory form was in a valid state.

react-hook-form keeps form state in the (virtual) DOM, i.e. it prefers uncontrolled inputs for performance reasons. It also supports controlled components by means of a Controller wrapper component, which depends on a control object returned from the useForm hook that initializes the form. Given that eRecruitment made extensive use of controlled inputs (e.g. dropdowns, and tracking whether a form section was "dirty"), this would have required initializing and exposing the form in a globally accessible way via Context or Redux anyway.

The effort to work around the abstractions of said libraries would have been significant, hence the decision was made to store the form data directly in Redux and write pure actions that created new forms, deleted forms, wrote to or erased a particular form field, etc. in a reducer.

```javascript
export const createForm = () => {
  return async (dispatch, getState) => {
    // actually write to realm DB, generate a UUID
    const newFormId = createRealmForm();
    // create file storage area
    await createFormFileFolder(newFormId);
    dispatch({
      type: FORM_STATE_ACTIONS.CREATE_FORM,
      formId: newFormId,
    });
    return newFormId;
  };
};

export const deleteRealmForm = (formId) => {
  const realmInstance = getRealmInstance();
  realmInstance.write(() => {
    // uses the most recent entry in SCHEMAS
    SCHEMAS[SCHEMAS.length - 1].schema.forEach((schema) => {
      const _object = realmInstance.objectForPrimaryKey(schema.name, formId);
      // skip sections that have no stored object
      if (_object) {
        realmInstance.delete(_object);
      }
    });
  });
};

export const deleteForm = (formId) => {
  return async (dispatch, getState) => {
    deleteRealmForm(formId);
    // delete file storage area
    await deleteAllFormData(formId);
    dispatch({
      formId,
      type: FORM_STATE_ACTIONS.DELETE_FORM,
    });
  };
};

// for use case 5. above
export const resetSectionData = (formId, schemaName) => {
  const realmInstance = getRealmInstance();
  // re-read the last-saved section from disk
  const _object = realmInstance.objectForPrimaryKey(schemaName, formId);
  const object = fromRealmObject(_object);
  object['modified'] = false;
  return {
    type: FORM_STATE_ACTIONS.RESET_FORM_SECTION_DATA,
    payload: {schema: schemaName, formId, data: object},
  };
};

// ...

export const newEFormsReducer = (state = initialState, action) => {
  switch (action.type) {
    // each case is wrapped in braces so its declarations stay block-scoped
    case FORM_STATE_ACTIONS.CREATE_FORM: {
      const realmInstance = getRealmInstance();
      const newForm = {};
      SCHEMAS[SCHEMAS.length - 1].schema.forEach((schema) => {
        const _object = realmInstance.objectForPrimaryKey(
          schema.name,
          action.formId,
        );
        const object = fromRealmObject(_object);
        object['modified'] = false;
        newForm[schema.name] = object;
      });
      return {...state, forms: {...state.forms, [action.formId]: newForm}};
    }
    case FORM_STATE_ACTIONS.DELETE_FORM: {
      // copy first, then delete, so the previous state is not mutated
      const newForms = {...state.forms};
      delete newForms[action.formId];
      return {...state, forms: newForms};
    }
    case FORM_STATE_ACTIONS.UPDATE_FORM_SECTION_DATA: {
      const {formId, schema, key, data} = action;
      // copy every level that changes instead of mutating nested state
      const form = {...state.forms[formId]};
      form[schema] = {...form[schema], [key]: data, modified: true};
      // one of the other schemas has been modified.
      form[FORMS_SCHEMA] = {...form[FORMS_SCHEMA], modified: true};
      return {...state, forms: {...state.forms, [formId]: form}};
    }
    case FORM_STATE_ACTIONS.RESET_FORM_SECTION_DATA: {
      const {formId, schema, data} = action.payload;
      const form = {...state.forms[formId], [schema]: data};
      form[FORMS_SCHEMA] = {
        ...form[FORMS_SCHEMA],
        modified: _isFormReallyModified(form),
      };
      return {...state, forms: {...state.forms, [formId]: form}};
    }
    case FORM_STATE_ACTIONS.MARK_FORM_SUBMITTED: {
      const {formId} = action;
      const form = {...state.forms[formId]};
      form[FORMS_SCHEMA] = {...form[FORMS_SCHEMA], submitted: new Date()};
      return {...state, forms: {...state.forms, [formId]: form}};
    }
    // ...
  }
  return state;
};
```
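The reducer calls _isFormReallyModified, which is not shown. One plausible shape for it, given that each section carries its own modified flag, is sketched below. This is an assumption rather than the original helper, and the value of FORMS_SCHEMA here is a placeholder because the real constant is not shown:

```typescript
// Hypothetical types and constant; the real definitions are not shown in the source.
type SectionState = { modified?: boolean; [field: string]: unknown };
type FormSections = Record<string, SectionState>;

const FORMS_SCHEMA = "Form"; // placeholder value (assumption)

// A form counts as modified when any section other than the top-level
// form record still has unsaved changes.
function isFormReallyModified(form: FormSections): boolean {
  return Object.keys(form).some(
    (schemaName) => schemaName !== FORMS_SCHEMA && form[schemaName].modified === true,
  );
}
```

With a helper like this, reverting the only dirty section clears the form-level flag, while reverting one of several dirty sections leaves it set.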

Realm DB was settled upon as the on-disk storage mechanism because it allows schemas to be defined and versioned as objects in TypeScript, which conveniently allowed types to be reused within the components themselves and when fetching submitted forms from the API. Migrations are also done in code in situations such as application start or a schema version bump.
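The idea of versioned schemas with migrations in code can be illustrated without the library. The sketch below is deliberately library-free and hypothetical: Realm's own migration callback operates on whole Realm instances rather than single records, and the SCHEMA_VERSIONS array, the migrate functions and the field used in the example are assumptions that only show how upgrades can be stepped through version by version.

```typescript
type FormRecord = Record<string, unknown>;

type SchemaVersion = {
  schemaVersion: number;
  // Upgrades a record from the previous version; absent for the first version.
  migrate?: (old: FormRecord) => FormRecord;
};

// Hypothetical version list, ordered from oldest to newest.
const SCHEMA_VERSIONS: SchemaVersion[] = [
  { schemaVersion: 1 },
  // Version 2 is assumed to introduce a nullable `submitted` field.
  { schemaVersion: 2, migrate: (old) => ({ ...old, submitted: old["submitted"] ?? null }) },
];

// Applies every migration newer than `fromVersion`, in order.
function migrateRecord(record: FormRecord, fromVersion: number): FormRecord {
  return SCHEMA_VERSIONS
    .filter((version) => version.schemaVersion > fromVersion)
    .reduce((acc, version) => (version.migrate ? version.migrate(acc) : acc), record);
}
```

Keeping each step small and ordered means a device that skipped several releases still ends up on the latest schema after a single start-up pass.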

Production

The top-right circular icon in the header of the Material Top Tabs navigator is a component connected to the Redux store. It is shown only if the focused form has been modified but not saved; tapping it reverts the form to its last-saved state.